Richard Cook and David Woods on successful anomaly response

O'Reilly Radar Podcast: SNAFU Catchers, knowing how things work, and the proper response to system discrepancies.

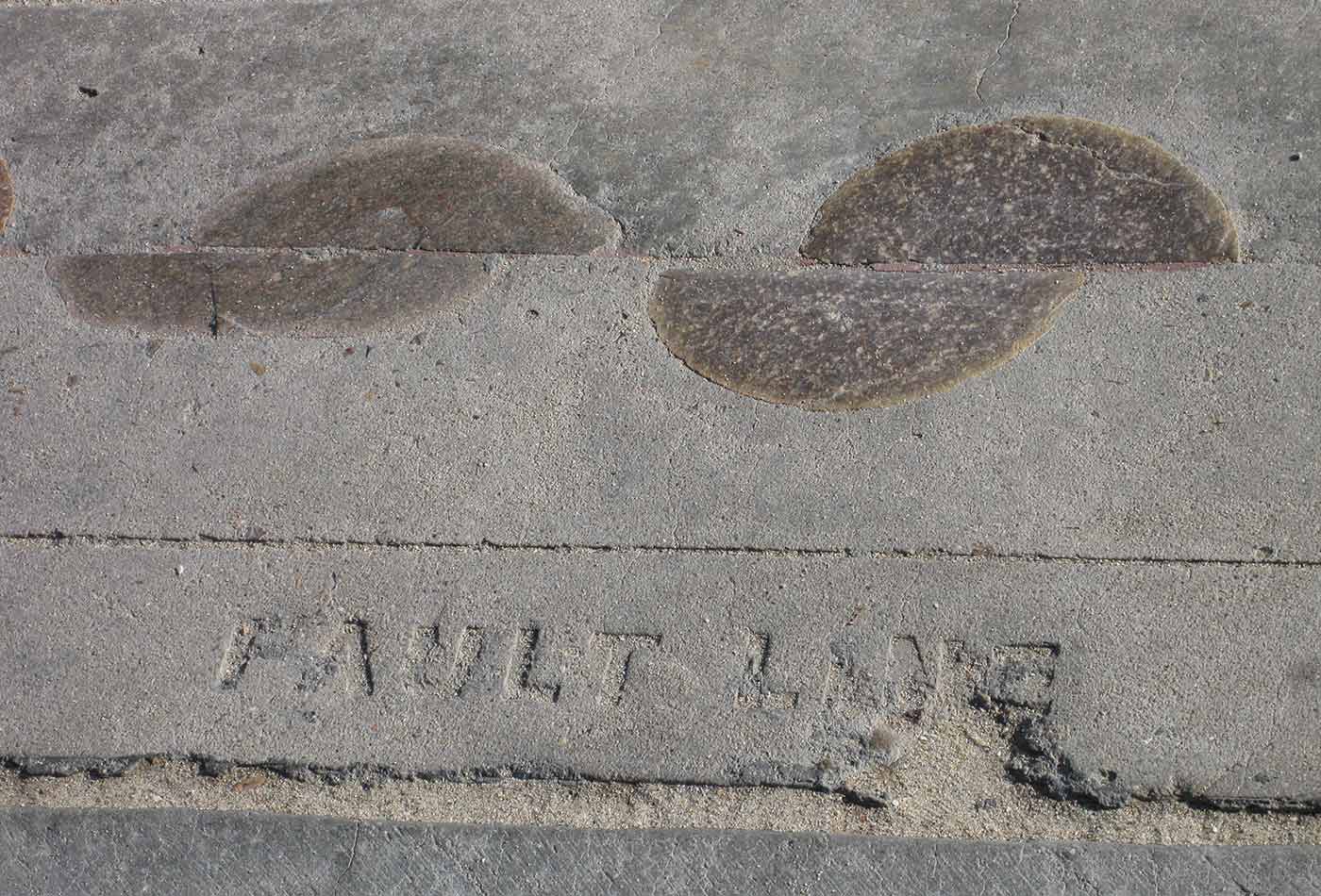

Fault line. (source: Lisa Andres on Flickr)


In this week’s episode, O’Reilly’s Mac Slocum sits down with Richard Cook and David Woods. Cook is a physician, researcher, and educator who is currently a research scientist in the Department of Integrated Systems Engineering at Ohio State University and emeritus professor of health care systems safety at Sweden’s KTH. Woods is also a professor at Ohio State University, where he leads the Initiative on Complexity in Natural, Social, and Engineered Systems and co-directs the Cognitive Systems Engineering Laboratory. They chat about SNAFU Catchers, anomaly response, and the importance of understanding not only how things fail but also how things normally work.

Here are a few highlights:

Catching situations abnormal

Cook:

We’re trying to understand how Internet-facing businesses manage to handle all the various problems, difficulties, and opportunities that come along. Our goal is to understand how to support people in that kind of work. It’s a fast-changing world that mostly appears, on the surface, to be functioning smoothly but, as people who work in the industry know, is in fact always struggling with different kinds of breakdowns, things that don’t work correctly, and obstacles that have to be addressed. SNAFU Catchers refers to the idea that people are constantly working to collect, and respond to, all the different kinds of things that foul up the system, and that this is the normal situation, not the abnormal one.

Woods:

[SNAFU] is a coinage from the grunts in World War II on our side, the winning side. Situation normal: all fucked up. So the normal situation is all fucked up, right? The idea that work is pristine and smooth (design it, follow the plan, put in automation, and everything is great) isn’t really the way things work in the real world. It appears that way from a distance, but on the ground there are gaps, uncertainties, conflicts, and trade-offs. Those are normal. In fact, they’re essential; they’re part of this universe and the way things work. What that means is that there is often a breakdown, a limit in how much adaptive capability is built into the system, and we have to add to that, because surprises will happen, exceptions will happen, anomalies will happen. Where does that extra capacity to adapt to surprise come from? That’s what we’re trying to understand and focus on. Not the SNAFU, which is just normal, but the catching: what are the processes, abilities, and capabilities of the teams, groups, and organizational practices that help you catch SNAFUs? That’s about anticipation and preparation, so you can respond quickly and directly when the surprise occurs.

Know how things work, not just how they fail

Cook:

There’s an old surgical saying: ‘Good results come from experience, and experience comes from bad results.’ That’s probably true in this industry as well. We learn from experience by having difficulties and solving those sorts of problems. We live in an environment in which people serve apprenticeships very early on in their lives, and the apprenticeship gives them opportunities to experience different kinds of failure. Those experiences tell them something about the kinds of activities they should perform once they sense a failure is occurring, and about the different things they can do to respond to different kinds of failures. Most of what happens here is a combination of understanding how the system is working and understanding what’s going on that suggests it’s not working in the right way. You need two kinds of knowledge to be able to do this: not just knowledge of how things fail, but also knowledge of how things normally work.

No anomaly is too small to investigate

Woods:

What’s interesting is that you have to have a pretty good model of how it’s supposed to work. Then you start getting suspicious: things don’t quite seem right. These are the early signals, sometimes called weak signals, and they are easy to discount. One of the things you see, for example in [NASA] mission control in its heyday, is that all discrepancies were anomalies until proven otherwise. That was the cultural ethos of mission control. When you lose that, you see people discounting: ‘Oh, that discrepancy isn’t really going to matter. I’ve got to get this other stuff done,’ or, ‘If I foul it up, some other things will start happening.’

What we see in successful anomaly response is this early ability to notice something starting to go wrong, and it is not definitive, right? If it were definitive, it would cross some threshold, it would activate some response, and it would pull other resources in to deal with it, because you don’t want it to get out of control. The preparation for, and success at, handling these things is to get started early. The failure mode is being slow and stale: you let it cook too long before you start to react. When you’re slow and stale, the cascade can get away from you and you lose control. When teams or organizations are effective at this, they notice things are slightly off, and then they pursue it. They dig a little deeper, follow up, test it, and bring other people to bear with different or complementary expertise. They don’t give up real quick and say, ‘That discrepancy is just noise and can be ignored.’ Now, most of the time those discrepancies probably are noise, right? They aren’t worth the effort. But sometimes they are the beginnings of something that’s going to threaten to cascade out of control.