Introducing RLlib: A composable and scalable reinforcement learning library

RISE Lab’s Ray platform adds libraries for reinforcement learning and hyperparameter tuning.

Industrial robots (source: Mixabest on Wikimedia Commons)

In a previous post, I outlined emerging applications of reinforcement learning (RL) in industry. I began by listing a few challenges facing anyone wanting to apply RL, including the need for large amounts of data, the difficulty of reproducing research results, and the difficulty of deriving the error estimates needed for mission-critical applications. Nevertheless, the success of RL in certain domains has received much media coverage. This has sparked interest, and companies are beginning to explore some of the use cases and applications I described in my earlier post. Many tasks and professions, including software development, are poised to incorporate some form of AI-powered automation. In this post, I’ll describe how RISE Lab’s Ray platform continues to mature and evolve just as companies are examining use cases for RL.

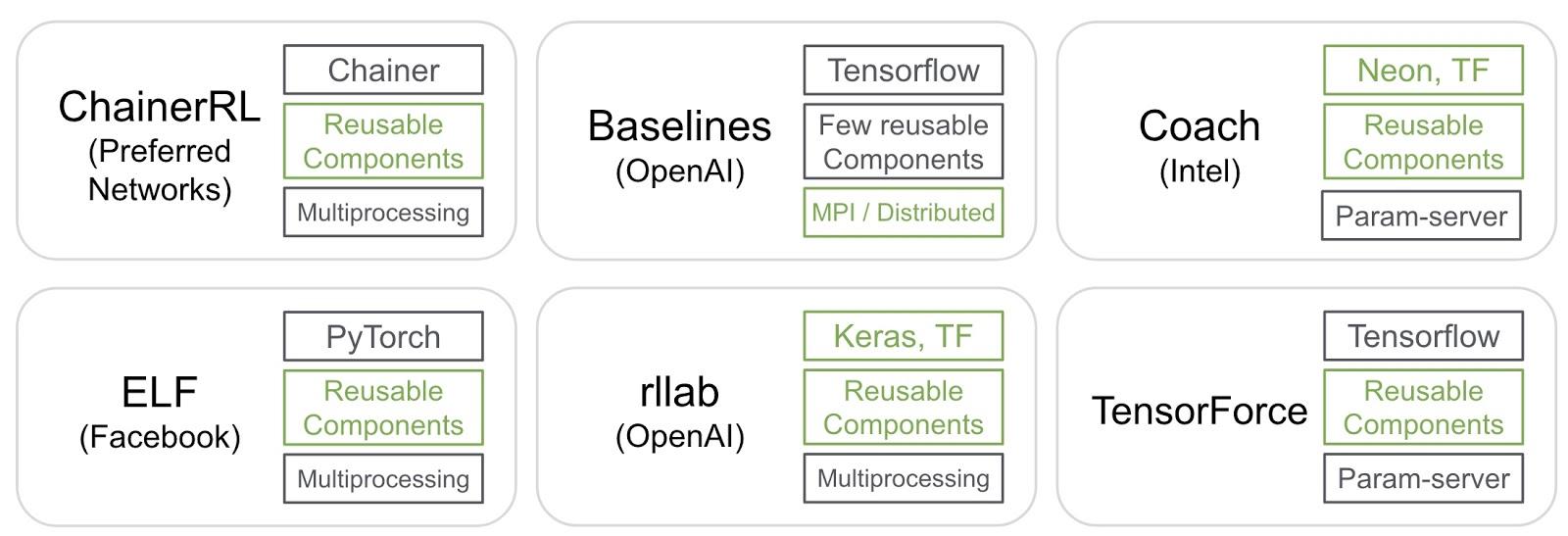

Assuming one has identified suitable use cases, how does one get started with RL? Most companies that are thinking of using RL for pilot projects will want to take advantage of existing libraries.

There are several open source projects that one can use to get started. From a technical perspective, there are a few things to keep in mind when considering a library for RL:

- Support for existing machine learning libraries. Because RL typically uses gradient-based or evolutionary algorithms to learn and fit policy functions, you will want the RL library to support your favorite machine learning framework (TensorFlow, Keras, PyTorch, etc.).

- Scalability. RL is computationally intensive, and having the option to run in a distributed fashion becomes important as you begin using it in key applications.

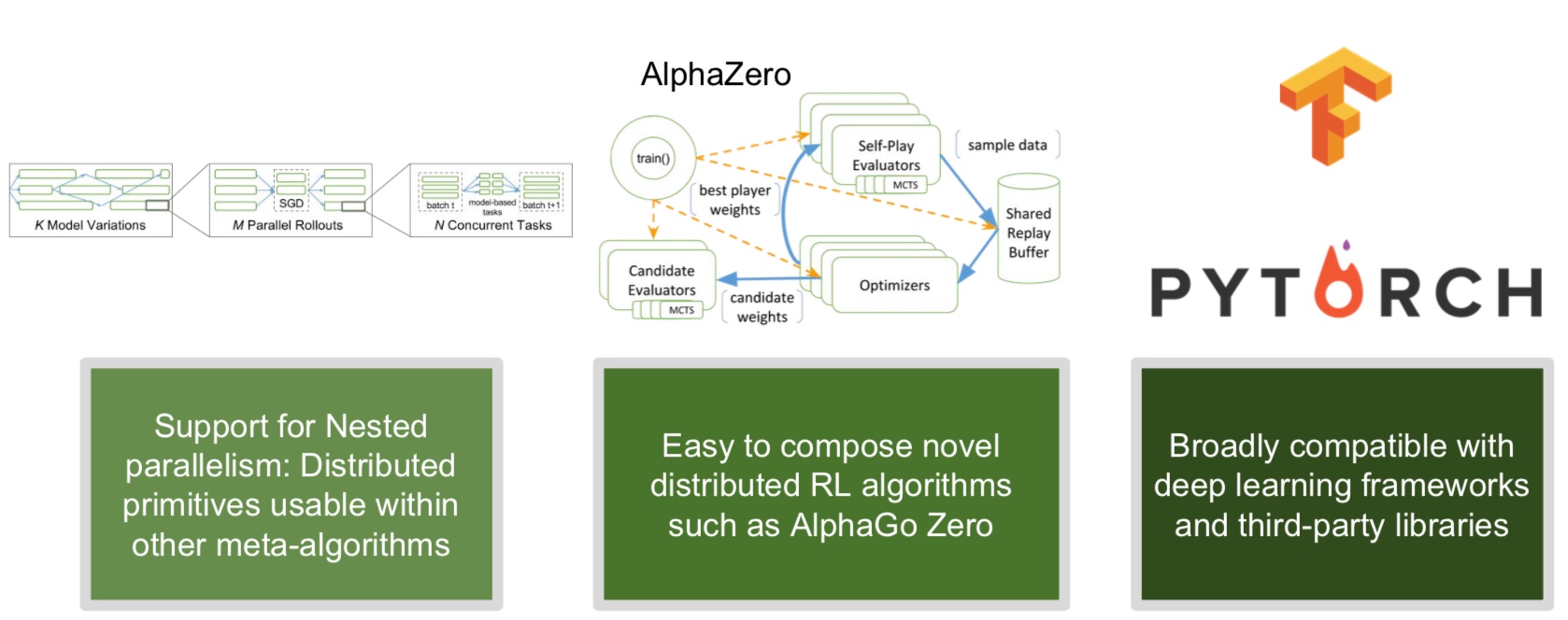

- Composability. RL algorithms typically involve simulations and many other components. You will want a library that lets you reuse components of RL algorithms (such as policy graphs and rollouts), that is compatible with multiple deep learning frameworks, and that provides composable distributed execution primitives (nested parallelism).
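To make "nested parallelism" concrete, here is a minimal sketch in plain Python. It uses the standard library's `concurrent.futures` rather than Ray, so it illustrates the idea, not Ray's or RLlib's API. A batch of rollouts runs in parallel, and each rollout itself fans out to several simulator calls in parallel. The names `simulate_step`, `rollout`, and `collect_batch` are hypothetical, and the seeded random "reward" stands in for a real simulator.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List


def simulate_step(seed: int) -> float:
    """Hypothetical stand-in for one simulator call; returns a reward in [0, 1)."""
    return random.Random(seed).random()


def rollout(rollout_id: int, num_envs: int = 4) -> float:
    """Inner level of parallelism: one rollout fans out to several simulator calls."""
    seeds = [rollout_id * 100 + i for i in range(num_envs)]
    with ThreadPoolExecutor(max_workers=num_envs) as inner_pool:
        rewards = list(inner_pool.map(simulate_step, seeds))
    return sum(rewards)


def collect_batch(num_rollouts: int = 3) -> List[float]:
    """Outer level: rollouts run in parallel, and each rollout is itself parallel."""
    with ThreadPoolExecutor(max_workers=num_rollouts) as outer_pool:
        return list(outer_pool.map(rollout, range(num_rollouts)))


if __name__ == "__main__":
    print(collect_batch())
```

Threads keep the sketch self-contained. Because of CPython's global interpreter lock, they will not speed up pure-Python work. A system like Ray instead schedules tasks across worker processes and machines, but the composition pattern (parallel work launched from inside parallel work) is the same.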

Introducing Ray RLlib

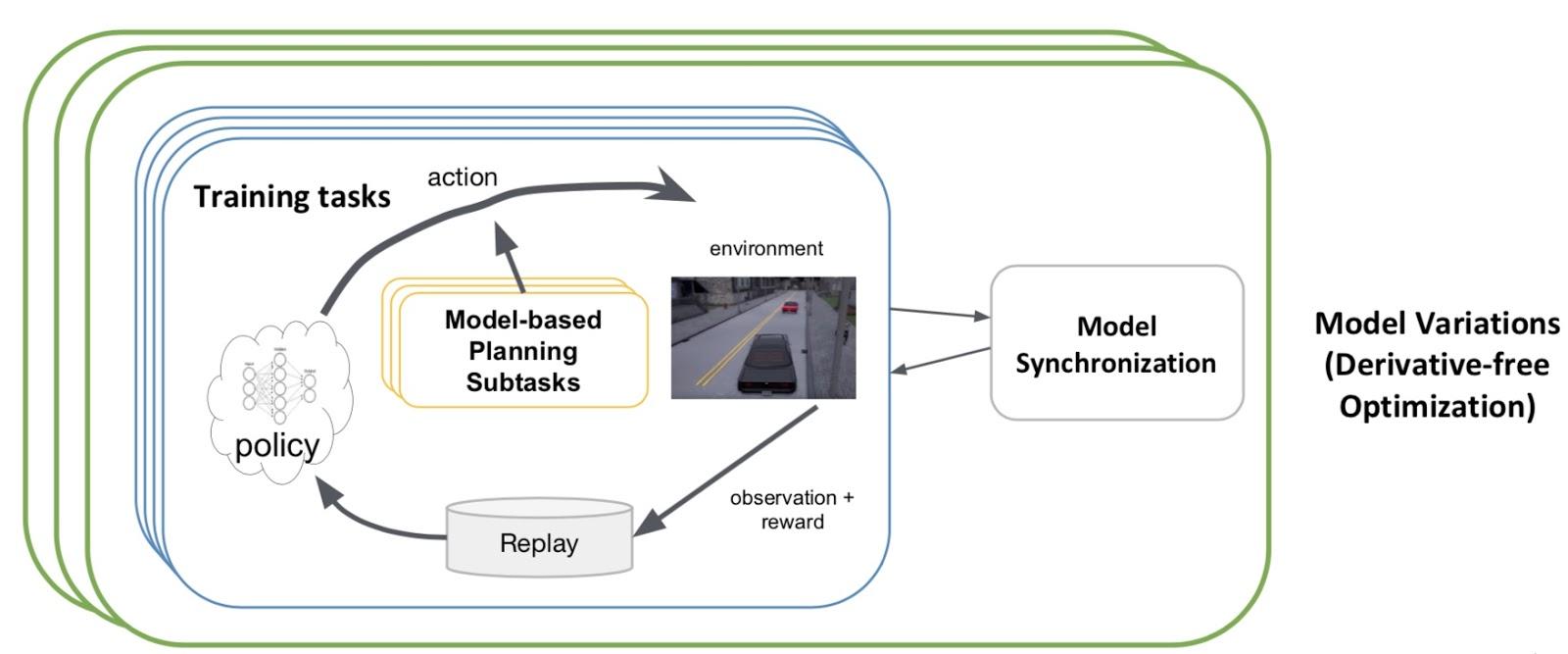

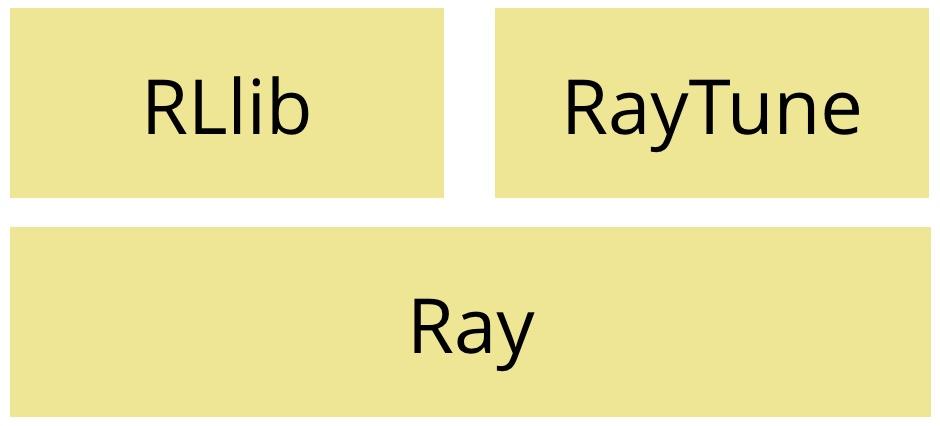

Ray is a distributed execution platform (from UC Berkeley’s RISE Lab) aimed at emerging AI applications, including those that rely on RL. RISE Lab recently released RLlib, a scalable and composable RL library built on top of Ray.

RLlib is designed to support multiple deep learning frameworks (currently TensorFlow and PyTorch) and is accessible through a simple Python API. It currently ships with the following popular RL algorithms (more to follow):

- Proximal Policy Optimization (PPO), a simpler variant of Trust Region Policy Optimization (TRPO).

- Asynchronous Advantage Actor-Critic (A3C).

- Deep Q Networks (DQN).

- Evolution Strategies, as described in this paper.
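To show what the last of these algorithms does at its core, here is a minimal, framework-free sketch of the basic Evolution Strategies update. It perturbs the parameters with Gaussian noise, scores each perturbed copy, and moves the parameters along the fitness-weighted average of the noise. This is not RLlib's implementation. The toy quadratic objective, the names `es_step` and `quadratic_fitness`, and the hyperparameter values are illustrative assumptions.

```python
import random
from typing import Callable, List

Vector = List[float]
TARGET = [0.5, -0.3, 0.8]  # hypothetical optimum for the toy objective


def quadratic_fitness(theta: Vector) -> float:
    """Toy objective to maximize: negative squared distance to TARGET."""
    return -sum((t - c) ** 2 for t, c in zip(theta, TARGET))


def es_step(theta: Vector, fitness: Callable[[Vector], float], rng: random.Random,
            sigma: float = 0.1, alpha: float = 0.05, population: int = 50) -> Vector:
    """One Evolution Strategies update using Gaussian parameter perturbations."""
    dim = len(theta)
    noises = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(population)]
    # Each evaluation is independent, which is what makes ES easy to parallelize.
    scores = [fitness([t + sigma * e for t, e in zip(theta, eps)]) for eps in noises]
    baseline = sum(scores) / population  # subtracting the mean score reduces variance
    grad = [0.0] * dim
    for eps, score in zip(noises, scores):
        for j in range(dim):
            grad[j] += (score - baseline) * eps[j]
    # Gradient estimate: (1 / (population * sigma)) * sum_i (F_i - baseline) * eps_i
    scale = alpha / (population * sigma)
    return [t + scale * g for t, g in zip(theta, grad)]


if __name__ == "__main__":
    rng = random.Random(42)
    theta = [0.0, 0.0, 0.0]
    for _ in range(300):
        theta = es_step(theta, quadratic_fitness, rng)
    print([round(t, 2) for t in theta])  # should end up close to TARGET
```

In a distributed setting, the fitness evaluations inside `es_step` are the natural unit to farm out to workers. Only the scores (and the noise seeds) need to travel back to the process that applies the update.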

It’s important to note that there is no dominant pattern for computing and composing RL algorithms and components. As such, we need a library that can take advantage of parallelism at multiple levels and physical devices. RLlib is an open source library for the scalable implementation of algorithms that connect the evolving set of components used in RL applications. In particular, RLlib enables rapid development because it makes it easy to build scalable RL algorithms through the reuse and assembly of existing implementations (“parallelism encapsulation”). RLlib also lets developers use neural networks created with several popular deep learning frameworks, and it integrates with popular third-party simulators.
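The idea of parallelism encapsulation can be sketched in a few lines. This is not RLlib's actual class structure. The names `RolloutWorker`, `sync_optimizer_step`, and `mean_tracking_update` are hypothetical, and plain Python threads stand in for distributed workers. The algorithm-specific pieces (how a worker produces experience, how the learner updates weights) are ordinary sequential code, while a reusable optimizer step owns all of the parallel sampling and weight broadcasting. The toy "learner" just tracks the mean of the samples, standing in for a real policy update.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


class RolloutWorker:
    """Hypothetical component: holds a copy of the weights and produces experience."""

    def __init__(self, seed: int):
        self.rng = random.Random(seed)
        self.weights = 0.0

    def sample(self) -> List[float]:
        # Toy experience: eight noisy rewards centered at 1.0.
        return [1.0 + self.rng.gauss(0.0, 0.1) for _ in range(8)]

    def set_weights(self, weights: float) -> None:
        self.weights = weights


def sync_optimizer_step(workers: List[RolloutWorker],
                        learner_update: Callable[[float, List[float]], float],
                        weights: float) -> float:
    """Reusable step that encapsulates parallelism: sample concurrently, update, broadcast."""
    with ThreadPoolExecutor(max_workers=len(workers)) as pool:
        batches = list(pool.map(RolloutWorker.sample, workers))
    samples = [x for batch in batches for x in batch]
    new_weights = learner_update(weights, samples)
    for worker in workers:
        worker.set_weights(new_weights)
    return new_weights


def mean_tracking_update(weights: float, samples: List[float], lr: float = 0.5) -> float:
    """Toy, purely sequential 'learner': move the weight halfway toward the sample mean."""
    return weights + lr * (sum(samples) / len(samples) - weights)


if __name__ == "__main__":
    workers = [RolloutWorker(seed) for seed in range(4)]
    weights = 0.0
    for _ in range(20):
        weights = sync_optimizer_step(workers, mean_tracking_update, weights)
    print(round(weights, 3))
```

With this split, swapping in a different `learner_update` or worker type changes the algorithm without touching the parallel code. Conversely, a different execution strategy (for example, asynchronous sampling) could be swapped in without touching the algorithm.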

Software for machine learning needs to run efficiently on a variety of hardware configurations, both on-premises and on public clouds. Ray and RLlib are designed to deliver fast training times on a single multi-core node or in a distributed fashion, and to perform efficiently on heterogeneous hardware (whatever the ratio of CPUs to GPUs might be).

Examples: Text summarization and AlphaGo Zero

The best way to get started is to apply RL to some of your existing data sets. To that end, one relatively recent application of RL is text summarization. Here’s a toy example to try: use RLlib to summarize unstructured text (note that this is not a production-grade model):

# Complete notebook available here: https://goo.gl/n6f43h
# `summarization` and `agent` come from the notebook (assumed: a helper
# module and a trained RLlib agent); neither is defined in this snippet.

document = """Insert your sample text here"""

summary = summarization.summarize(agent, document)

print(f"Original document length is {len(document)}")
print(f"Summary length is {len(summary)}")
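An RL approach to summarization needs a scalar reward for each generated summary. A common choice in the literature is an n-gram overlap score such as ROUGE. Whether the linked notebook uses such a reward is not stated here, so treat the following as an assumption. The sketch computes a simplified unigram-overlap F1 (ROUGE-1 style, with lowercase whitespace tokenization and no stemming) that could serve as that reward; `rouge1_f1` is a hypothetical helper, not part of RLlib.

```python
from collections import Counter


def rouge1_f1(summary: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: clipped unigram overlap on lowercase whitespace tokens."""
    cand = Counter(summary.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps min(count in summary, count in reference) per token.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge1_f1("the cat", "the cat sat on the mat"))
```

In an RL loop, the policy would propose a summary, and a score like this against a reference summary would be returned as the episode's reward.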

Text summarization is just one of several possible applications. A recent RISE Lab paper provides other examples, including an implementation of the main algorithm used in AlphaGo Zero in about 70 lines of RLlib pseudocode.
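The RISE Lab pseudocode is not reproduced here. As a small illustration of one ingredient of AlphaGo Zero, here is a sketch of the move-selection rule its Monte Carlo tree search uses, as described in the AlphaGo Zero paper: choose the action maximizing Q(s, a) + U(s, a), where U(s, a) = c_puct · P(s, a) · sqrt(Σ_b N(s, b)) / (1 + N(s, a)). Here P is the policy network's prior, N is the visit count, and Q is the mean backed-up value, which is 0 for unvisited edges. `EdgeStats`, `select_action`, and c_puct = 1.5 are illustrative choices, not taken from RLlib or the paper.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class EdgeStats:
    prior: float            # P(s, a), from the policy network
    visits: int = 0         # N(s, a)
    value_sum: float = 0.0  # W(s, a), total value backed up through this edge

    @property
    def q(self) -> float:
        # Q(s, a) = W(s, a) / N(s, a), defined as 0 for unvisited edges.
        return self.value_sum / self.visits if self.visits else 0.0


def select_action(edges: Dict[str, EdgeStats], c_puct: float = 1.5) -> str:
    """PUCT-style selection: exploit high Q, explore high-prior, rarely visited moves."""
    sqrt_total = math.sqrt(sum(e.visits for e in edges.values()))

    def score(e: EdgeStats) -> float:
        return e.q + c_puct * e.prior * sqrt_total / (1 + e.visits)

    return max(edges, key=lambda action: score(edges[action]))


if __name__ == "__main__":
    edges = {"a": EdgeStats(prior=0.7), "b": EdgeStats(prior=0.3, visits=10, value_sum=6.0)}
    print(select_action(edges))
```

The exploration term shrinks as an edge accumulates visits, so the search gradually shifts from following the network's prior toward the values it has actually observed. A full implementation would also expand leaves with the network and back up values along the visited path.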

Hyperparameter tuning with RayTune

Another common example involves model building. Data scientists spend a fair amount of time conducting experiments, many of which involve tuning parameters for their favorite machine learning algorithm. As deep learning (and RL) become more popular, data scientists will need software tools for efficient hyperparameter tuning and other forms of experimentation and simulation. RayTune is a new distributed hyperparameter search framework for deep learning and RL. It is built on top of Ray and is closely integrated with RLlib. RayTune supports grid search and uses early-stopping techniques, including the Median Stopping Rule and HyperBand.
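Tune's own API is not shown in this post. To illustrate how grid search and the Median Stopping Rule fit together, here is a minimal sequential sketch in plain Python. Every point in a small grid is tried, and a trial is stopped early if its best score so far is strictly worse than the median of the running-average scores that completed trials had reached by the same step (for a metric being maximized). The grid, the `toy_score` learning curve, and the helper names are hypothetical. A real tuner would run trials in parallel rather than one after another.

```python
import itertools
import math
import statistics
from typing import Dict, List, Tuple

# Hypothetical search space for the sketch.
GRID = {"lr": [0.1, 0.01, 0.001], "width": [32, 64]}


def toy_score(lr: float, width: int, step: int) -> float:
    """Hypothetical learning curve: rises with step, best at lr=0.01 and larger width."""
    quality = max(0.0, 1.0 - 0.3 * abs(math.log10(lr) + 2)) * width / (width + 32)
    return quality * (1.0 - math.exp(-(step + 1) / 3))


def running_average(curve: List[float], step: int) -> float:
    return sum(curve[: step + 1]) / (step + 1)


def should_stop(curve: List[float], completed: List[List[float]], step: int) -> bool:
    """Median stopping rule: best-so-far vs. median of peers' running averages."""
    peers = [running_average(c, step) for c in completed if len(c) > step]
    if not peers:
        return False  # nothing to compare against yet
    return max(curve[: step + 1]) < statistics.median(peers)


def grid_search_with_median_stopping(grid: Dict[str, list], num_steps: int = 10):
    completed: List[List[float]] = []  # learning curves of trials that ran every step
    results: Dict[Tuple, Tuple[float, bool]] = {}  # config -> (last score, stopped early?)
    for config in itertools.product(*grid.values()):
        params = dict(zip(grid.keys(), config))
        curve: List[float] = []
        stopped = False
        for step in range(num_steps):
            curve.append(toy_score(step=step, **params))
            if should_stop(curve, completed, step):
                stopped = True
                break
        if not stopped:
            completed.append(curve)
        results[config] = (curve[-1], stopped)
    return results


if __name__ == "__main__":
    for config, (score, stopped) in grid_search_with_median_stopping(GRID).items():
        print(config, round(score, 3), "stopped early" if stopped else "completed")
```

Early stopping is what makes exhaustive search affordable. Configurations that are clearly behind the pack release their compute after a few steps instead of running to completion. HyperBand pushes the same idea further by allocating resources across many configurations in successive rounds.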

There is a growing list of open source software tools available to companies wanting to explore deep learning and RL. We are in an empirical era, and we need tools that enable quick experiments in parallel, while letting us take advantage of popular software libraries, algorithms, and components. Ray just added two libraries that will let companies experiment with reinforcement learning and also efficiently search through the space of neural network architectures.

Reinforcement learning applications involve multiple components, each of which presents opportunities for distributed computation. Ray RLlib adopts a programming model that enables the easy composition and reuse of components, and takes advantage of parallelism at multiple levels and physical devices. Over the short term, RISE Lab plans to add more RL algorithms, APIs for integration with online serving, support for multi-agent scenarios, and an expanded set of optimization strategies.

Related resources:

- “Practical applications of reinforcement learning in industry”

- “Bringing gaming to life with AI and deep learning”: How reinforcement learning opens the door for the use of training rather than programming in game development.

- “Ray: A distributed execution framework for reinforcement learning applications”: A 2017 Artificial Intelligence Conference presentation by Ion Stoica

- “Building reinforcement learning applications with Ray”: A session at the AI Conference in New York City, April 29–May 2, 2018.

- “Neuroevolution: A different kind of deep learning”

- “Why continuous learning is key to AI”