Artificial intelligence?

AI scares us because it could be as inhuman as humans.

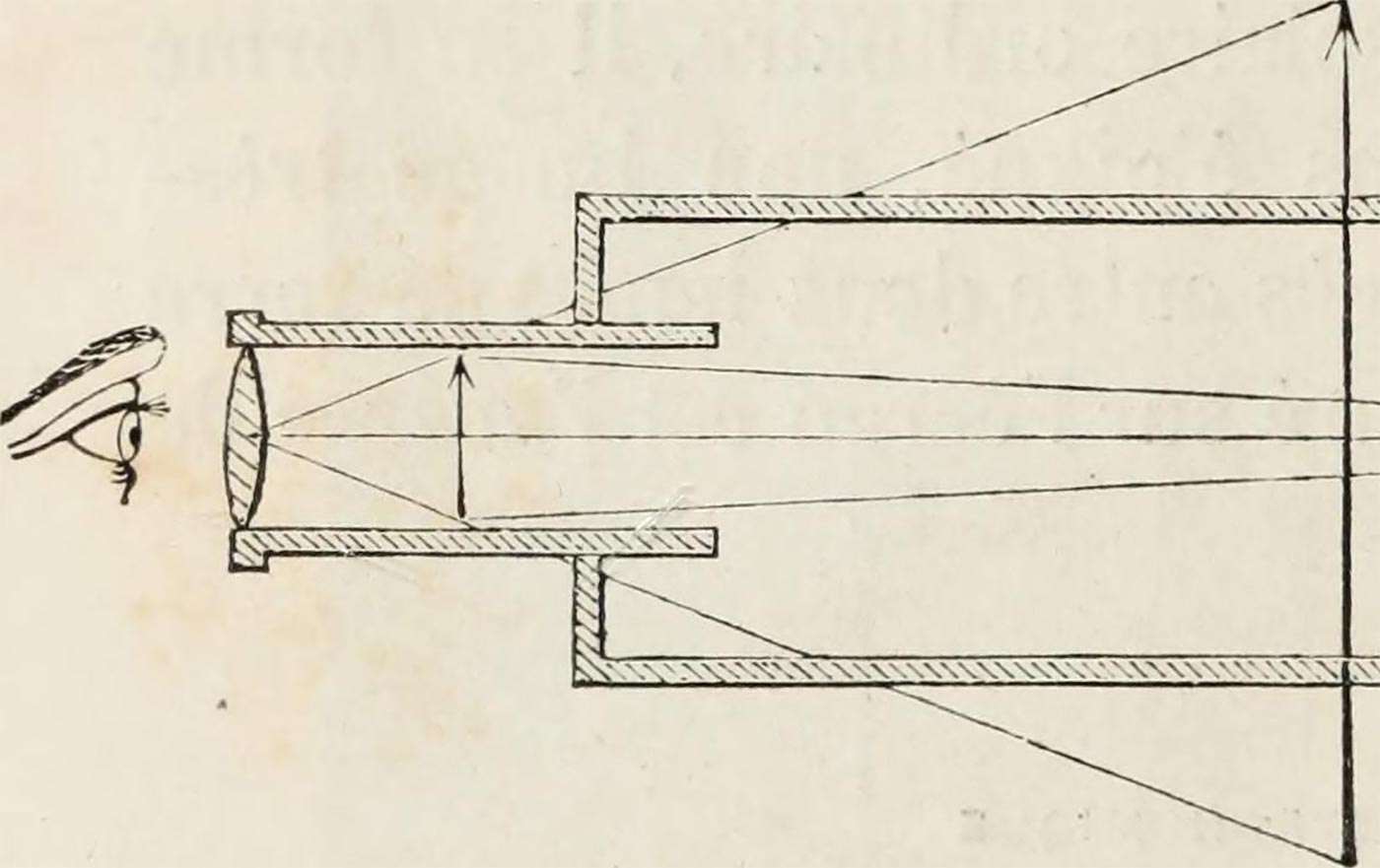

Image from page 285 of "La verrerie depuis les temps les plus reculés jusqu'à nos jours" (1869). (source: Internet Archive on Flickr)

Elon Musk started a trend. Ever since he warned us about artificial intelligence, all sorts of people have been jumping on the bandwagon, including Stephen Hawking and Bill Gates.

Although I believe we’ve entered the age of postmodern computing, when we don’t trust our software, and write software that doesn’t trust us, I’m not particularly concerned about AI. AI will be built in an era of distrust, and that’s good. But there are some bigger issues here that have nothing to do with distrust.

What do we mean by “artificial intelligence”? We like to point to the Turing test; but the Turing test includes an all-important Easter Egg: when someone asks Turing’s hypothetical computer to do some arithmetic, the answer it returns is incorrect. An AI might be a cold calculating engine, but if it’s going to imitate human intelligence, it has to make mistakes. Not only can it make mistakes, it can (indeed, must) be deceptive, misleading, evasive, and arrogant if the situation calls for it.

That’s a problem in itself. Turing’s test doesn’t really get us anywhere. It holds up a mirror: if a machine looks like us (including mistakes and misdirections), we can call it artificially intelligent. That begs the question of what “intelligence” is. We still don’t really know. Is it the ability to perform well on Jeopardy? Is it the ability to win chess matches? These accomplishments help us to define what intelligence isn’t: it’s certainly not the ability to win at chess or Jeopardy, or even to recognize faces or make recommendations. But they don’t help us to determine what intelligence actually is. And if we don’t know what constitutes human intelligence, why are we even talking about artificial intelligence?

So, perhaps by “intelligence” we mean “understanding.” After writing Artificial Intelligence: Summoning the Demon, I had a wonderful email conversation with Lee Gomes. He pointed me to the Chinese Room Argument proposed by the philosopher John Searle. It goes like this: imagine a computer that can “translate” Chinese into English. Then put a human who does not know Chinese in a room, with access to the translation program’s source code. Give the human some Chinese to translate, and let him do the translation by executing the code by hand. Can we say that the human “understands” Chinese? Clearly, we’d say “no.” And if not, can we say that the computer “understands” Chinese?

I confess that I haven’t read the significant academic discussion of this experiment. One thing stands out, though: while we wouldn’t say that the human (or the computer) “understands” Chinese, we would certainly say that both “translate” Chinese. And that’s an important distinction. For humans, there is a connection between understanding and the ability to translate. For computers, there isn’t. Computers spend much of their time translating. I wrote a program the other day that displayed successive Fourier series approximations to a square wave. To execute that program, my laptop had to translate that code into machine language instructions. That’s not a big deal: it happens whenever anyone writes a program. But I would have been shocked if the computer said, “Hey, anyone can do a square wave, why don’t you try a sawtooth instead?” That would require understanding. Computers are getting better at translating natural language, but we’d never say that a computer “understands language,” any more than we’d say that a computer that wins Jeopardy is “intelligent.” Computer translation is at best a useful assistive device. It’s instrumental; it’s a tool. It’s not understanding and, just as with intelligence, it’s not really clear what understanding means.
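For readers curious about the kind of program mentioned above, here is a minimal sketch of successive Fourier approximations to a square wave. It is not the original program; the function name `square_wave_partial_sum` and the sample point are assumptions chosen for illustration. It uses the standard series for a unit square wave, which contains only odd harmonics.

```python
import math


def square_wave_partial_sum(t, n_terms):
    """Approximate a unit square wave at time t using n_terms odd harmonics.

    Hypothetical helper for illustration: evaluates
    (4 / pi) * sum of sin((2k - 1) * t) / (2k - 1) for k = 1..n_terms.
    """
    total = 0.0
    for k in range(1, n_terms + 1):
        harmonic = 2 * k - 1  # only odd harmonics appear in the series
        total += math.sin(harmonic * t) / harmonic
    return 4.0 / math.pi * total


if __name__ == "__main__":
    t = math.pi / 2  # a point where the ideal square wave equals 1
    for n in (1, 3, 10, 100):
        print(n, round(square_wave_partial_sum(t, n), 4))
```

The machine evaluates each approximation faithfully as more terms are added, but nothing in the loop amounts to knowing what a square wave is.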

When we think about AI, I suspect that what we’re really after is some notion of “consciousness.” And what scares us is that, if consciousness is somehow embodied in a machine, we don’t know where, or what, that consciousness is. But if intelligence and understanding are slippery concepts, consciousness is even more so. I know I’m conscious, but I don’t really know anything about anyone else’s consciousness, whether human or otherwise. We have no insight into what’s going on inside someone else’s head. Indeed, if some theorists are right, we’re all just characters in a massive simulation run by a hyper-intelligent civilization. If that’s true, are any of us conscious? We’re all just AIs, and rather limited ones.

At the BioFabricate summit in New York, one attendee (I don’t remember who) made an important point. There are a half dozen or so fundamental properties of living systems: they have boundaries, they obtain and use energy, they grow, they reproduce, and they respond to their environment. Individually, we can build artificial systems with each of these properties, and without too much trouble, we could probably engineer all the properties into a single artificial system. But if we did so, would we consider the result “life”? His bet (and mine) is “no.” Whether artificial or not, life is ultimately something we don’t understand, as is intelligence. Attempting to create synthetic life might help us to define what life is, and will certainly help us think about what life isn’t. Similarly, the drive to create AI may help us to define intelligence, but it will certainly make it clear what intelligence isn’t.

That isn’t to say that we won’t create something dangerous because we don’t know what we’re trying to create. But burdening developers with attempts to control something that we don’t understand is a wasted effort: that would certainly slow down research, it would certainly slow down our understanding of what we really mean by “intelligence,” and it would almost certainly leave us more vulnerable to being blind-sided by something that is truly dangerous. Whatever artificial intelligence may mean, I place more trust in machine learning researchers such as Andrew Ng and Yann LeCun, who think that anything that might reasonably be called “artificial intelligence” is decades away, than in the opinions of well-intentioned amateurs.

While it’s nice and scary to talk about autonomous robots killing people, the problem really isn’t computer volition or AI; it’s human volition. Yes, computers are getting really good at recognizing faces. Whether or not they’re as good as regular humans, or likely to become as good, isn’t really the point. (Research results as reported by the press are often significantly overstated.) A machine that only recognizes faces, no matter how accurately, couldn’t be described as “intelligent,” whatever that means. But there’s the rub: to do anything interesting, a face recognition system needs to be connected to something that makes decisions and takes actions, whether it’s sending spam email or launching weaponized drone aircraft. That other system, whether it’s a human or an AI, will reflect old-fashioned human stupidity. There are plenty of reasons to find this scary. Humans don’t understand risk. Humans don’t understand false positives (or anything about the “receiver operating characteristic”). Humans most certainly don’t understand reasoning about probability.

For example, what would happen if an analysis run by the FBI coupled people who worked in the World Trade Center but didn’t show up on 9/11 (I know several) with religious preferences, ethnic preferences, sexual orientation, and other factors? We would almost certainly spot some “terrorists” who had nothing to do with the crime. How would they prove their innocence against an “artificial intelligence” engine? As Cathy O’Neil has pointed out, one of the biggest dangers we face is using data to put a veneer of “science” on plain old prejudice. Going back to the robot that decides it needs to destroy humanity: if such a robot should ever exist, its decision will almost certainly have been enabled by some human. After all, humans have come very close to destroying humanity, and will no doubt do so again. Should that happen, we will no doubt blame it on AI, but the real culprit will be ourselves.
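The false-positive problem described above can be made concrete with a little arithmetic. The sketch below applies Bayes' rule to a screening system; every number in it (a population of one million, ten real targets, a 99% detection rate, a 1% false-positive rate) is a made-up assumption for illustration, not data from any real system, and the function name is hypothetical.

```python
def probability_flag_is_correct(base_rate, true_positive_rate, false_positive_rate):
    """Return the chance that a flagged person is a real target (Bayes' rule).

    base_rate: fraction of the population that are real targets.
    true_positive_rate: chance the system flags a real target.
    false_positive_rate: chance the system flags an innocent person.
    """
    true_flags = base_rate * true_positive_rate
    false_flags = (1.0 - base_rate) * false_positive_rate
    return true_flags / (true_flags + false_flags)


if __name__ == "__main__":
    # Assumed, illustrative inputs: 10 real targets among 1,000,000 people.
    p = probability_flag_is_correct(
        base_rate=10 / 1_000_000,
        true_positive_rate=0.99,
        false_positive_rate=0.01,
    )
    print(f"Chance that a flagged person is a real target: {p:.4%}")
```

Under these assumptions, only about one flagged person in a thousand is a real target, even though the system sounds "99% accurate." The rest are innocent people who now have to argue with the machine.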

If we don’t need to fear artificial intelligence, what should we fear?

- As someone on Twitter said, in response to Hawking, “I’m not concerned about artificial intelligence. I’m more concerned with natural stupidity.” Yes, absolutely.

- We should fear losing AI as a tool to mitigate or repair the damage we are doing to our world now, not the damage that AI might do to our world later.

- We should fear research that is being done in the dark, in locked labs, rather than research that is being done in the open. Research done in the open can be questioned, examined, and understood; research that’s out of sight will always be out of control. The Future of Life Institute that Elon Musk has funded may be useful; but that institute will never see the really dangerous research.

But fear itself doesn’t help. Here’s what we really need to watch: we need to understand risk, probability, and statistics so that we can make reasonable decisions about the world we live in. We need to understand the consequences of trusting flawed, unreliable systems (whether they claim to be “intelligent” or not). We need to be aware of our prejudices and bigotries, and make sure that we don’t build systems that grant our prejudices the appearance of science. That’s ultimately why AI scares us: we worry that it will be as inhuman as humans all too frequently are. If we are aware of our own flaws, and can honestly admit and discuss them, we’ll be OK.

I don’t believe AI systems will ever do anything significant apart from human volition. We might use AI to hide from our responsibility for our mistakes, but they will most certainly be our mistakes.