A growing number of applications are being built with Spark

Many more companies want to highlight how they're using Apache Spark in production.

Manitobah (source: BiblioArchives)

Manitobah (source: BiblioArchives)

One of the trends we’re following closely at Strata is the emergence of vertical applications. As components for creating large-scale data infrastructures enter their early stages of maturation, companies are focusing on solving data problems in specific industries rather than building tools from scratch. Virtually all of these components are open source and have contributors across many companies. Organizations are also sharing best practices for building big data applications, through blog posts, white papers, and presentations at conferences like Strata.

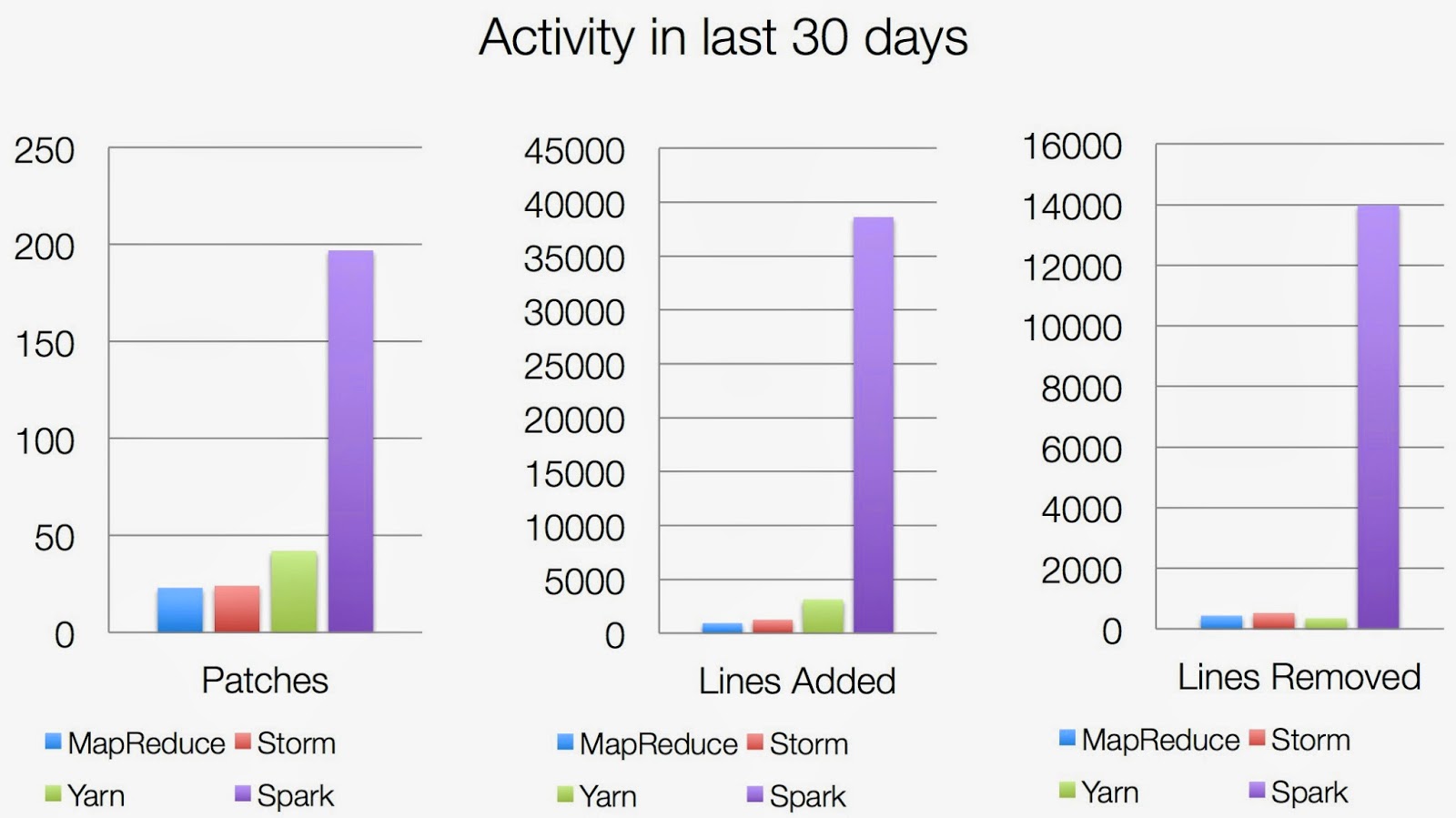

These trends are particularly apparent in a set of technologies that originated from UC Berkeley’s AMPLab: the number of companies that are using (or plan to use) Spark in production1 has exploded over the last year. The surge in popularity of the Apache Spark ecosystem stems from the maturation of its individual open source components and the growing community of users. The tight integration of high-performance tools that address different problems and workloads, coupled with a simple programming interface (in Python, Java, Scala), make Spark one of the most popular projects in big data. The charts below show the amount of active development in Spark:

For the second year in a row, I’ve had the privilege of serving on the program committee for the Spark Summit. I’d like to highlight a few areas where Apache Spark is making inroads. I’ll focus on proposals2 from companies building applications on top of Spark.

Real-time processing: This year’s conference saw many more proposals from companies that have deployed Spark Streaming. The most common usage remains real-time data processing and scoring of machine-learning models. There are also sessions on tools that expand the capability and appeal of Spark Streaming (StreamSQL and a tool for CEP).

Data integration3 and Data processing: At the heart of many big data applications are robust data fusion systems. This year’s Spark Summit has several sessions on how companies integrate data sources and make the results usable for services and applications. Nube Technologies is introducing a fuzzy matching engine for handling noisy data, a new library called Geotrellis adds geospatial data processing to Spark, and a new library for parallel, distributed, real-time image processing will be unveiled.

Advanced analytics: MLlib, the default machine-learning in the Spark ecosystem, is already used in production deployments. This year’s conference includes sessions on tools that enhance MLlib including a distributed implementation of Random Forest, matrix factorization, entity recognition in NLP, and a new library that enables analytics on tabular data (distributed data frames from Adatao).

Beyond these new tools for analytics, there are sessions on how Spark enables large-scale, real-time recommendations (Spotify, Graphflow, Zynga), text mining (IBM, URX), and detection of malicious behavior (FSecure).

Applications: I’ve written about how Spark is being used in genomics. Spark is also starting to get deployed in applications that generate and consume machine-generated data (including wearables and the Internet of Things). Guavus has recently begun to move parts of their widely deployed application for processing large amounts of event data to Spark.

There are many more interesting applications4 that will be featured at the upcoming Spark Summit. This community event is fast becoming a showcase of next-generation big data applications. As Spark continues to mature, this shift in focus from tools to case studies and applications will accelerate even more. [NOTE: If you’re interested in attending the Spark Summit, use the following 10% discount code.]

New release: This week marked a major milestone for Apache Spark with the release of the 1.0 version. The release notes have a detailed list of enhancements and improvements, but here are a few that should appeal to the data community: a new feature called Spark SQL provides schema-aware data modeling and SQL language support in Spark, MLLib has expanded to include several new algorithms, and there are major updates to both Spark Streaming and GraphX.

(0) Full disclosure: I am an advisor to Databricks – a startup commercializing Apache Spark.

(1) I’ve been on the program committee for all the Spark Summits, and I’m the Content Director of Strata. The number of proposals from companies using Spark has grown considerably over the last year.

(2) There are also many sessions on core technologies in the the Apache Spark ecosystem.

(3) This is an important topic that’s frequently underestimated by engineering managers. For more on this subject, check Jay Kreps’ recent Strata webcast on data integration.

(4) Databricks is in the early stages of a new program (Certified on Spark) to certify applications that are compatible with Apache Spark.