When models are everywhere

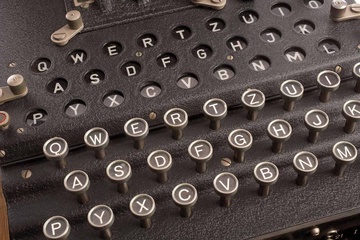

Rotor cipher machine (source: Pixabay)

You probably interact with fifty to a hundred machine learning products every day, from your social media feeds and YouTube recommendations to your email spam filter and the updates that the New York Times, CNN, or Fox News decide to push, not to mention the hidden models that place ads on the websites you visit and redesign your “experience” on the fly. Not all models are created equal, however: they operate on different principles and affect us as individuals and communities in different ways. They differ fundamentally along dimensions such as the alignment of incentives between stakeholders, their “creep factor,” and the nature of their feedback loops.

To understand the menagerie of models that are fundamentally altering our individual and shared realities, we need to build a typology, a classification of their effects and impacts. This typology is based on concepts such as the nature of different feedback loops in currently deployed algorithms, and how incentives can be aligned and misaligned between various stakeholders. Let’s start by looking at how models impact us.

SCREENS, FEEDBACK, AND “THE ENTERTAINMENT”

Many of the models you interact with are mediated through screens, and there’s no shortage of news about how many of us spend our lives glued to them. Children, parents, friends, relatives: we are all subject to screens, ranging from screens that fit on our wrist to screens that occupy entire walls. You may have seen loved ones sitting on the couch, watching a smart TV while playing a game on an iPad, texting on their smartphones, and receiving update after update on their Apple Watch, a kaleidoscope of screens of decreasing size. We even have apps to monitor and limit screen time. Limiting screen time has been an option on iPhones for over a year, and there are apps for iPhone and Android that not only monitor your children’s screen time but also let you reward them for doing their chores or their homework by giving them more of it. Screen time has been gamified: where are you on the leaderboard?

We shouldn’t be surprised. In the 70s, TV wasn’t called the “boob tube” for nothing. In David Foster Wallace’s novel Infinite Jest, there is a videotape known as “The Entertainment.” When somebody watches it, they are unable to look away, no longer caring about food, shelter, or sleep, and they eventually enter a state of immobile, catatonic bliss. There’s a telling sequence in which more and more people approach those watching it to see what all the hullabaloo is about and also end up with their eyes glued to the screen.

Infinite Jest was published in 1996, just as the modern Web was coming into being. It predates recommendation engines, social media, engagement metrics, and the recent explosion of AI, but not by much. And like a lot of near-future science fiction, it’s remarkably prescient. It’s a shock to read a novel about the future and realize that you’re living that future.

“The Entertainment” is not the result of algorithms, business incentives, and product managers optimizing for engagement metrics. The novel has no Facebook, no Twitter, not even a Web; it’s a curious relic of the 80s and 90s that “The Entertainment” takes the form of a VHS tape rather than an app. The story of “The Entertainment” is a tale of the webs that connect form, content, and addiction, along with the societal networks and feedback loops that keep us glued to our screens. David Foster Wallace got the general structure of the user–product interaction right. That feedback loop isn’t new, of course; it was well known to TV network executives. What television lacked was the immediate feedback that comes with clicks, tracking cookies, tracking pixels, online experimentation, machine learning, and “agile” product cycles.

Does “The Entertainment” show people what they want to see? In a highly specific, short-term sense, possibly. In a long-term sense, definitely not. Regardless of how we think of ourselves, humans aren’t terribly good at trading off short-term stimulus against long-term benefits. That’s something we’re all familiar with: we’d rather eat bacon than vegetables, we’d rather watch Game of Thrones than do homework, and so on. Short-term stimulus is addictive: maybe not as addictive as “The Entertainment,” but addictive nonetheless.

YOUTUBE, CONSPIRACY, AND OPTIMIZATION

We’ve seen the same argument play out on YouTube: when its recommendation algorithm was optimized for how long users kept their eyeballs on YouTube, and more polarizing conspiracy videos were shown as a result, we were told that YouTube was showing people what they wanted to see. This is a subtle sleight-of-mind, and it’s also wrong. As Zeynep Tufekci points out, this is analogous to an automated school cafeteria loading plates with fatty, salty, and sweet food because it has figured out that’s what keeps kids in the cafeteria the longest. Also interesting is that YouTube never wrote ‘Show more polarizing conspiracy videos’ into its algorithm: that was merely a result of the optimization process. YouTube’s algorithm was measuring what kept viewers there the longest, not what they wanted to see, and feeding them more of the same. Like sugar and fat, conspiracy videos proved to be addictive, regardless of the viewer’s position on any given cause. If “The Entertainment” were posted to YouTube, it would be highly recommended on the platform: viewers can’t leave. It’s the ultimate virtual roach trap. If that’s not engagement, what is? But it’s clearly not what viewers want: they certainly don’t want to forget about food and shelter, not even for a great TV show.
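To make the cafeteria analogy concrete, here is a minimal Python sketch of a recommender that can only see a proxy metric. The catalogue, the watch times, and the `viewer_satisfaction` scores are all invented for illustration, and the greedy loop is a deliberately simplified stand-in, not YouTube’s actual system. Note that nothing in the code mentions topics or conspiracies: the skew comes entirely from maximizing measured watch time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Video:
    title: str
    avg_watch_minutes: float    # what the platform can measure (the proxy)
    viewer_satisfaction: float  # what viewers say they wanted, 0 to 1 (not measured)


# Hypothetical catalogue; every number is invented for illustration.
CATALOGUE = [
    Video("news explainer", 6.0, 0.8),
    Video("cooking tutorial", 8.0, 0.9),
    Video("conspiracy deep-dive", 25.0, 0.3),
]


def run_feedback_loop(videos, rounds=100):
    """Greedy recommender: always show whatever has the best observed watch time.

    Each video is shown once to get an initial measurement; after that, every
    recommendation goes to the video with the highest average minutes watched.
    Returns how many times each title was shown.
    """
    minutes = {v.title: v.avg_watch_minutes for v in videos}
    plays = {v.title: 1 for v in videos}
    for _ in range(rounds - len(videos)):
        pick = max(videos, key=lambda v: minutes[v.title] / plays[v.title])
        minutes[pick.title] += pick.avg_watch_minutes  # viewer watches as usual
        plays[pick.title] += 1
    return plays


print(run_feedback_loop(CATALOGUE))
# {'news explainer': 1, 'cooking tutorial': 1, 'conspiracy deep-dive': 98}
print(max(CATALOGUE, key=lambda v: v.viewer_satisfaction).title)
# cooking tutorial
```

Because the hypothetical viewers behave identically every time, this toy locks in after the first measurement; a real system would add exploration and noise, but the incentive to maximize the proxy is the same.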

One result of this is that in 2016, out of 1,000 videos recommended by YouTube after an equal number of searches for “Trump” and “Clinton,” 86% of recommended videos favored the Republican nominee. In retrospect, the recommendation algorithm’s “logic” is inescapable. If you were a Democrat, Trump videos made you mad. If you were a Republican, Trump’s content was designed to make you mad. And anger and polarization are bankable commodities that drive the feedback loop in an engagement-driven world.

Another result is the weirdness encountered in certain parts of kids’ YouTube, such as “surprise Eggs videos [that] depict, often at excruciating length, the process of unwrapping Kinder and other egg toys.” Some of these have up to 66 million views. All of these are the results of business incentives for both YouTube and its content providers, of the metrics used to measure success, and of the power of feedback loops at both the individual and the societal level, as manifested in modern big tech recommender systems.

It’s important to note that the incentives of YouTube, its advertisers, and its users are often misaligned, in that users searching for “real news” continually end up being shunted down conspiracy theory and “fake news” rabbit holes due to the mixed incentive structure of the advertising-based business model. Such mixed incentives were even noted by Google founders Sergey Brin and Larry Page in their 1998 paper The Anatomy of a Large-Scale Hypertextual Web Search Engine, which details their first implementation of the Google Search algorithm. In Appendix A, aptly titled ‘Advertising and Mixed Motives’, Brin and Page state explicitly that “the goals of the advertising business model do not always correspond to providing quality search to users” and “we expect that advertising funded search engines will be inherently biased towards the advertisers and away from the needs of the consumers.” *Gulp*. Also note that they refer to the user of Search here as a consumer.

FEEDBACK LOOPS, FILTER BUBBLES, ECHO CHAMBERS, AND INCENTIVE STRUCTURES

YouTube is a case study in the impact of feedback loops on the individual: if I watch something for a certain amount of time, YouTube will recommend similar things to me, for some definition of similar (similarity is defined by broader societal interactions with content). The result is what we now call a “filter bubble,” a term coined by internet activist Eli Pariser in his 2011 book The Filter Bubble: What the Internet Is Hiding from You. Netflix’s algorithm has historically produced similar types of recommendations and filter bubbles (although business incentives are now forcing it to surface more of its own content).
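A toy content-based recommender shows how a filter bubble can emerge from nothing more than “recommend the most similar thing.” This sketch assumes hand-labeled topic vectors and cosine similarity; real systems typically learn similarity from aggregate user interactions, as the paragraph above notes, so the item names, vectors, and helper functions here are all hypothetical.

```python
import math

# Hypothetical items described by (politics, sports, cooking) topic weights.
ITEMS = {
    "election recap": (0.9, 0.1, 0.0),
    "campaign rally": (1.0, 0.0, 0.0),
    "policy debate": (0.8, 0.0, 0.2),
    "match highlights": (0.0, 1.0, 0.0),
    "pasta basics": (0.0, 0.0, 1.0),
}


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def next_recommendation(history):
    """Recommend the unwatched item most similar to everything watched so far."""
    # The user profile is the sum of the topic vectors of watched items.
    profile = [sum(ITEMS[title][i] for title in history) for i in range(3)]
    candidates = [title for title in ITEMS if title not in history]
    return max(candidates, key=lambda title: cosine(profile, ITEMS[title]))


def simulate(start, steps):
    """Start from one watched item and follow the recommendations."""
    history = [start]
    for _ in range(steps):
        history.append(next_recommendation(history))
    return history


print(simulate("election recap", steps=2))
# ['election recap', 'campaign rally', 'policy debate']
```

Each recommendation pulls the profile further toward politics, which in turn makes the next political item look even more similar: a small, individual-scale feedback loop.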

Twitter and Facebook have feedback loops that operate slightly differently, because every user can be both a content provider and a consumer, and the recommendations arise from a network of multi-sided interactions. If I’m sharing content and liking content, the respective algorithms will show me more that is similar to both, resulting in what we call “echo chambers.” These echo chambers represent a different kind of feedback that doesn’t just involve a single user: it’s a feedback loop that involves the user and their connections. The network that directly impacts me is that of my connections and the people I follow.

We don’t have to look far to see other feedback loops offline. There are runaway feedback loops in “predictive policing,” whereby more police are sent to neighborhoods with higher “reported & predicted crime,” which results in more reports of crime there, which results in more police being sent, and so on. Due to the information and power asymmetries at play here, along with how such feedback loops discriminate against specific socioeconomic classes, projects such as White Collar Crime Risk Zones, which maps predictions of white collar crime, are important. Similarly, an application that hospitals use to screen for patients with high-risk conditions that require special care wasn’t recommending that care for black patients as often as for white patients: the application used health care spending to estimate need, and because white patients spend more on health care, their conditions appeared to be more serious. While these applications look completely different, the feedback loop is the same. If you spend more, you get more care; if you police more, you make more arrests. And the cycle goes on.

Note that in both cases, a major part of the problem was also the use of proxies for metrics: cost as a proxy for health, police reports as a proxy for crime, not dissimilar to the use of YouTube view time as a proxy for what a viewer wants to watch (for more on metrics and proxies, we highly recommend the post The problem with metrics is a big problem for AI by Rachel Thomas, Director of the Center for Applied Data Ethics at USF). There are also interaction effects between the many models deployed in society, which means they feed back into each other: those most likely to be treated unfairly by the healthcare algorithm are also more likely to be discriminated against by models used in employment hiring flows and more likely to be targeted by predatory payday loan ads online, as detailed by Cathy O’Neil in Weapons of Math Destruction.
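Both the runaway loop and the proxy problem can be sketched in a few lines of Python. The first toy model assumes two neighborhoods with identical underlying crime, a single patrol sent each day wherever the most crime has been recorded, and crime that is recorded only where the patrol goes; the second ranks hypothetical patients by past cost versus actual need. All names and numbers are invented, and these are toy models, not descriptions of any deployed system.

```python
# Toy model 1: a runaway feedback loop in patrol allocation (hypothetical data).
def simulate_patrols(recorded, true_rate, days):
    """Each day, send the single patrol to the area with the most recorded crime.

    recorded:  area -> incidents recorded so far (the proxy the model sees)
    true_rate: area -> incidents per day a patrol would observe there
    Crime is only recorded where the patrol goes, so the record feeds itself.
    """
    recorded = dict(recorded)  # don't mutate the caller's data
    for _ in range(days):
        target = max(recorded, key=recorded.get)
        recorded[target] += true_rate[target]
    return recorded


# Toy model 2: ranking patients by a proxy (past cost) instead of need.
PATIENTS = [
    # Hypothetical: group "Y" spends less than group "X" for similar need.
    {"id": "p1", "group": "X", "need": 0.80, "past_cost": 9000},
    {"id": "p2", "group": "Y", "need": 0.85, "past_cost": 5000},
    {"id": "p3", "group": "X", "need": 0.50, "past_cost": 7000},
    {"id": "p4", "group": "Y", "need": 0.90, "past_cost": 6000},
]


def select_for_care(patients, key, k):
    """Return the ids (sorted) of the top-k patients under the given score."""
    ranked = sorted(patients, key=key, reverse=True)
    return sorted(p["id"] for p in ranked[:k])


print(simulate_patrols({"A": 6, "B": 5}, {"A": 3, "B": 3}, days=30))
# {'A': 96, 'B': 5}
print(select_for_care(PATIENTS, key=lambda p: p["past_cost"], k=2))  # ['p1', 'p3']
print(select_for_care(PATIENTS, key=lambda p: p["need"], k=2))       # ['p2', 'p4']
```

In this toy, a small historical imbalance (6 versus 5 recorded incidents) is enough to send every patrol to neighborhood A, and neighborhood B’s record never changes, even though the two areas are identical. Likewise, selecting by cost picks the patients who spent the most, not the ones who need the most care.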

Google Search operates at another scale of networked feedback: that of everybody. When I search for “Artificial Intelligence,” the results aren’t only a function of what Google knows about me, but also of how successful each link has been for everybody who has seen it previously. Google Search also operates in a fundamentally different way from many modern recommendation systems: historically, it has optimized its results to get you off its platform, though recently its emphasis has shifted. Whereas so many tech companies optimize for engagement with their platforms, trying to keep you from going elsewhere, Google’s incentive with Search was to direct you to another site, most often for the purpose of discovering facts. Under this model, there is an argument that the incentives of Google, advertisers, and users were all aligned, at least when searching for basic facts: in theory, all three stakeholders want to get the right fact in front of the user. This is why Search weighs long clicks more heavily than short clicks (the longer the time before the user clicks back to Google, the better). Now that Google has shifted to providing answers to questions rather than links to answers, it is valuing engagement with its own platform over engagement with other sites; as an advertiser, you’re more likely to succeed if you advertise directly on Google’s results page. Even more recently, Google announced that it is incorporating BERT (Bidirectional Encoder Representations from Transformers, a technology enabling “anyone to train their own state-of-the-art question answering system”) into Search, which will allow users to make more complex and conversational queries and will enable you to “search in a way that feels natural for you” (according to Google, this is “one of the biggest leaps forward in the history of Search”). Fundamental changes in Search that encourage more complex queries could result in a shift of incentives.
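One way a long-click signal could be computed is sketched below. Google has not published how it actually uses click data, so the 60-second threshold, the decision to count users who never return as a long click, and the `rank_links` helper are all assumptions made for illustration.

```python
from typing import Optional

# Hypothetical threshold; Google's real click signals are not public.
LONG_CLICK_SECONDS = 60


def long_click_rate(dwell_times: list[Optional[float]]) -> float:
    """Fraction of clicks on a link that were 'long'.

    Each entry is the number of seconds before the user came back to the
    results page, or None if they never came back (counted as a long click).
    """
    if not dwell_times:
        return 0.0
    long_clicks = sum(1 for d in dwell_times if d is None or d >= LONG_CLICK_SECONDS)
    return long_clicks / len(dwell_times)


def rank_links(click_logs: dict[str, list[Optional[float]]]) -> list[str]:
    """Order links by long-click rate, best first (hypothetical scoring)."""
    return sorted(click_logs, key=lambda url: long_click_rate(click_logs[url]), reverse=True)


logs = {
    "clickbait.example": [3, 5, 4, 8, 120],   # many clicks, users bounce back fast
    "encyclopedia.example": [300, None, 95],  # fewer clicks, users stay
}
print(rank_links(logs))  # ['encyclopedia.example', 'clickbait.example']
```

Under this kind of scoring, a link only does well if users leave Google and stay away, which is the alignment of incentives described above; a raw click count would have favored the clickbait page.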

Also, this theoretical alignment of incentives between Google, advertisers, and users is an idealization. In practice, Google search encodes all types of cultural and societal biases, such as racial discrimination, as investigated in Safiya Noble’s Algorithms of Oppression. An example of this is that, for many years, when using Google image search with the keyword “beautiful,” the results would be dominated by photos of white women. In the words of Ruha Benjamin, Associate Professor of African American Studies at Princeton University, “race and technology are co-produced.”

A final word (for now) on developing healthy Google Search habits and practices: know that the SEO (Search Engine Optimization) industry is worth close to $80 billion, and that the way you’re served results and the potential misalignment of incentives depend on whether your search is informational (searching for information, such as “Who was the 44th President of the United States?”), navigational (searching for a particular website, such as “Wikipedia”), or transactional (searching to buy something, such as “Buy Masterclass subscription”). Keep a skeptical mind about the results you’re served! Personalization of search results may be handy in the short term. However, when making informational searches, you’re being served what you typically assume is ground truth but is actually tailored to you, based on what Google already knows about your online and, increasingly, offline behavior. There is also an information asymmetry: you don’t know what Google knows about you, or how that information plays into the incentives of Google’s ad-based business model. For informational searches, this could be quite disturbing. As Jaron Lanier points out in Ten Arguments for Deleting Your Social Media Accounts Right Now, how would you feel if Wikipedia showed you and me different histories, based on our respective browser activities? To take this a step further, what if Wikipedia tailored the “facts” served to us as a function of an ad-based business model?

For advertisers, incentive systems are also strangely skewed. We recently searched for Stitch Fix, the online personal styling service. This is a basic navigational search, and Google could easily have served us the Stitch Fix website. It did, but above it were two advertisements: the first was for Stitch Fix and the second was for Trunk Club, a Stitch Fix competitor. This means that Trunk Club is buying ads for its competitor’s keywords, a common practice, and Stitch Fix then has to engage in defensive advertising because of how much traffic Google Search has, even when the user is clearly looking for its product! As a result, the user sees only ads above the fold (at least on a cell phone) and needs to scroll down to find the correct and obvious search result. There is an argument that, if a user is explicitly searching to buy a product, it should be illegal for Google to force the product in question into defensive advertising.

TOWARDS A TYPOLOGY OF MODEL IMPACT AND EFFECTS

YouTube, the Facebook feed, Google Search, and Twitter are examples of modern algorithms and models that alter our perceptions of reality; applications like predictive policing reflect biased perceptions of reality that may have little to do with actual reality. Indeed, these models create their own realities, becoming self-fulfilling prophecies. They all operate in different ways and on different principles. The nature of the feedback loops, the resulting phenomena, and the alignment of incentives between users, platforms, content providers, and advertisers are all different. In a world that’s increasingly filled with models, we need to assess their impact, identify challenges and concerns, and discuss and implement paths in the solution space.

This first attempt at a classification of model impacts and effects probed several models that are part of our daily lives by looking at the nature of their feedback loops and the alignment of incentives between stakeholders (model builders, users, and advertisers). Other key dimensions to explore include “creep” factor, “hackability” factor, and how networked the model itself is (is it constantly online and retrained?). Such a classification will allow us to assess the potential impact of classes of models, consider how we wish to interact with them, and propose paths forward. This work is part of a broader movement of users, researchers, and builders who are actively engaged in discovering and documenting how these models work, how they are deployed, and what their impacts are. If you are interested in exploring this space, we encourage you to check out the non-exhaustive reading list below.

***

The authors would like to thank Shira Mitchell for valuable feedback on an early draft of this essay and Manny Moss for valuable feedback on a late draft.

READING LIST

- Model Cards for Model Reporting by Mitchell et al.

- Datasheets for Datasets by Gebru et al.

- Data Statements for Natural Language Processing: Toward Mitigating System Bias and Enabling Better Science by Bender and Friedman

- Anatomy of an AI System by Crawford and Joler

- Algorithms of Oppression by Safiya Umoja Noble

- Weapons of Math Destruction by Cathy O’Neil

- Race After Technology by Ruha Benjamin

- Automating Inequality by Virginia Eubanks

- Twitter and Tear Gas by Zeynep Tufekci

- It’s Complicated: The Social Lives of Networked Teens by danah boyd

- Ten Arguments for Deleting Your Social Media Accounts Right Now by Jaron Lanier

- Ruined by Design by Mike Monteiro

- AI Now Report 2018 by Whittaker et al.

- Owning Ethics: Corporate Logics, Silicon Valley, and the Institutionalization of Ethics by Jacob Metcalf, Emanuel Moss, and danah boyd (Data & Society)

- 21 Fairness Definitions and Their Politics, a tutorial by Arvind Narayanan at FAT* 2018

- Prediction-Based Decisions and Fairness: A Catalogue of Choices, Assumptions, and Definitions by Mitchell et al.

- The problem with metrics is a big problem for AI by Rachel Thomas

- Ethical Principles, OKRs, and KPIs: what YouTube and Facebook could learn from Tukey by Chris Wiggins

- Algorithmic Accountability: A Primer by Caplan et al. (Data & Society)

- The Digital Defense Playbook by Our Data Bodies

- The Algorithmic Justice League