Pair Programming with AI

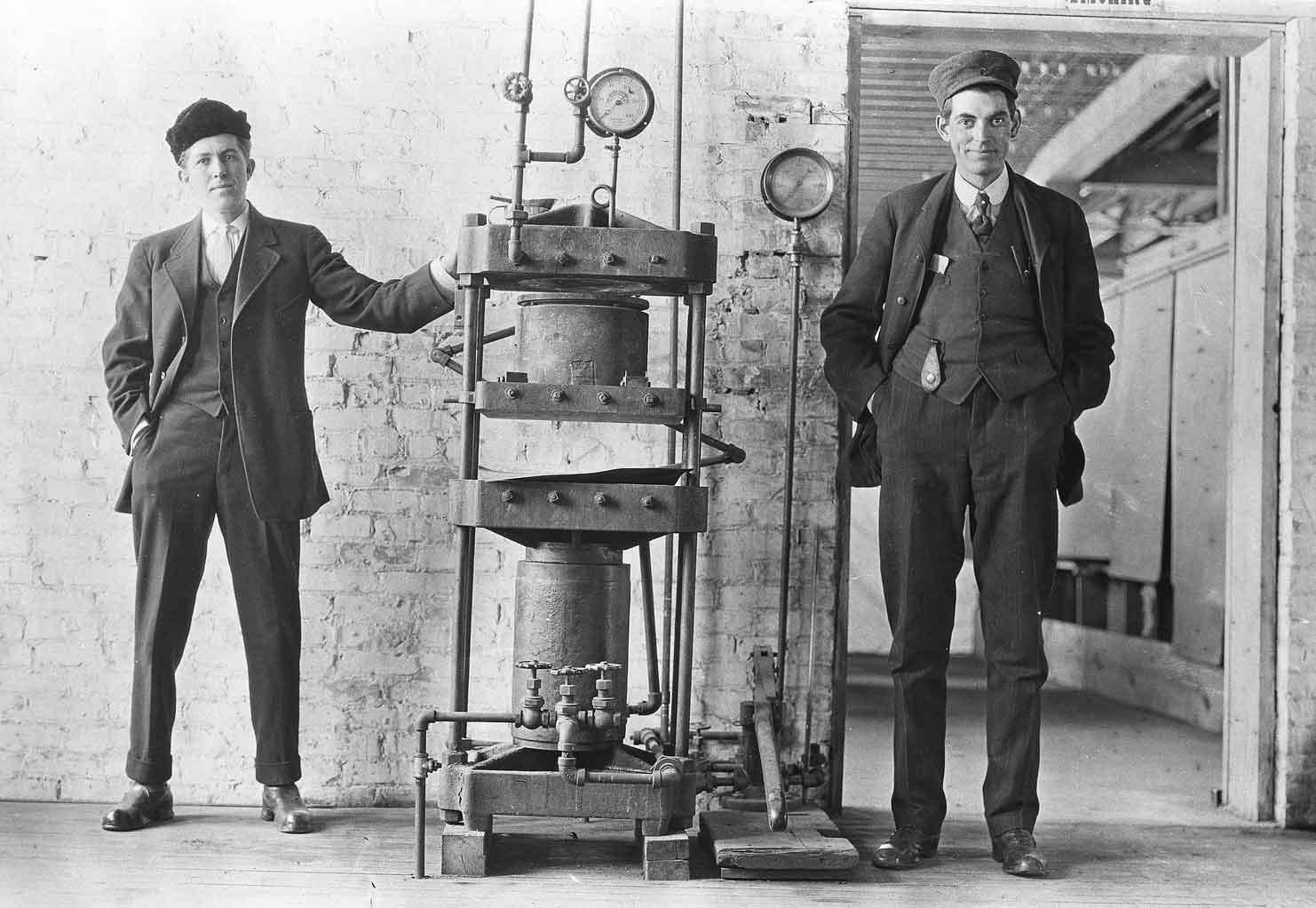

C. Vulcanized Products Co, Muskegon, Michigan, 1911 (source: Community Archives via Flickr)

In a conversation with Kevlin Henney, we started talking about the kinds of user interfaces that might work for AI-assisted programming. This is a significant problem: neither of us was aware of any substantial work on user interfaces that support collaboration. However, as software developers, many of us have been practicing effective collaboration for years. It’s called pair programming, and it’s not at all like the models we’ve seen for interaction between an AI system and a human.

Most AI systems we’ve seen envision AI as an oracle: you give it the input, it pops out the answer. It’s a unidirectional flow from the source to the destination. This model has many problems; for example, one reason medical doctors have been slow to accept AI may be that it’s good at giving you the obvious solution (“that rash is poison ivy”), at which point the doctor says “I knew that…” Or it gives you a different solution, to which the doctor says “That’s wrong.” Doctors worry that AI will “derail clinicians’ conversations with patients,” hinting that oracles are unwelcome in the exam room (unless they’re human).

Shortly after IBM’s Watson beat the world Jeopardy champions, IBM invited me to see a presentation about it. For me, the most interesting part wasn’t the Jeopardy game Watson played against some IBM employees; it was when they showed the set of answers Watson considered before selecting its answer, weighted with their probabilities. That level of detail was the real gold. We don’t need an AI system to tell us something obvious, or something we can Google in a matter of seconds. We need AI when the obvious answer isn’t the right one, and one of the possible but rejected answers is.
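
To make the contrast with an oracle concrete, here is a minimal sketch of an interface that returns a ranked list of candidates instead of a single answer. It is not how Watson works; the names `Candidate` and `rank_candidates`, the softmax weighting, and the example scores are all assumptions made up for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Candidate:
    answer: str
    probability: float


def rank_candidates(scores: Dict[str, float], top_k: int = 3) -> List[Candidate]:
    """Convert raw scores to probabilities (softmax) and return the top_k, best first."""
    if not scores:
        return []
    peak = max(scores.values())  # subtract the max for numerical stability
    weights = {answer: math.exp(score - peak) for answer, score in scores.items()}
    total = sum(weights.values())
    ranked = sorted(weights.items(), key=lambda item: item[1], reverse=True)
    return [Candidate(answer, weight / total) for answer, weight in ranked[:top_k]]


# Hypothetical scores: the point is that the runners-up stay visible.
for candidate in rank_candidates(
    {"poison ivy": 2.0, "contact dermatitis": 1.5, "shingles": 0.2}
):
    print(f"{candidate.probability:.2f}  {candidate.answer}")
```

An oracle would keep only the first line of that output. The rejected answers, and how close they came, are what a human partner would actually want to explore.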

What we really need is the ability to have a dialog with the machine. We still don’t have the user interface for that. We don’t need complete, perfect solutions; we need partial solutions in situations where we don’t have all the information, and we need the ability to explore those solutions with an (artificially) intelligent partner. What is the logic behind the second, third, fourth, and fifth solutions? If we know the most likely solution is wrong, what’s next? Life is not like a game of Chess or Go—or, for that matter, Jeopardy.

What would this look like? One of the most important contributions of Extreme Programming and other Agile approaches was that they weren’t unidirectional. These methodologies stressed iteration: building something useful, demo-ing it to the customer, taking feedback, and then improving. Compared to Waterfall, Agile gave up on the master plan and specification that governed the project’s shape from start to finish, in favor of many mid-course corrections.

That cyclic process, which is about collaboration between software developers and customers, may be exactly what we need to get beyond the “AI as Oracle” interaction. We don’t need a prescriptive AI writing code; we need a round trip, in which the AI makes suggestions, the programmer refines those suggestions, and together, they work towards a solution.

That solution is probably embedded in an IDE. Programmers might start with a rough description of what they want to do, in an imprecise, ambiguous language like English. The AI could respond with a sketch of what the solution might look like, possibly in pseudo-code. The programmer could then continue by filling in the actual code, possibly with extensive code completion (and yes, based on a model trained on all the code in GitHub or whatever). At this point, the IDE could translate the programmer’s code back into pseudo-code, using a tool like Pseudogen (a promising new tool, though still experimental). Any writer, whether of prose or of code, knows that having someone tell you what they think you meant does wonders for revealing your own lapses in understanding.
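
As a rough illustration of the "tell me what you think I meant" step, the sketch below turns a small Python function back into plain-English lines. It is a toy, rule-based stand-in and does not use Pseudogen or reproduce its approach; the function names `describe` and `describe_statement` are hypothetical, and it assumes Python 3.9 or later for `ast.unparse`.

```python
import ast


def describe_statement(stmt: ast.stmt) -> str:
    """Describe one statement in rough English; fall back to the code itself."""
    if isinstance(stmt, ast.Return):
        return "return " + (ast.unparse(stmt.value) if stmt.value else "nothing")
    if isinstance(stmt, ast.If):
        return f"if {ast.unparse(stmt.test)}, then do {len(stmt.body)} step(s)"
    if isinstance(stmt, ast.For):
        return (f"for each {ast.unparse(stmt.target)} in "
                f"{ast.unparse(stmt.iter)}, do {len(stmt.body)} step(s)")
    if isinstance(stmt, ast.Assign):
        targets = ", ".join(ast.unparse(t) for t in stmt.targets)
        return f"set {targets} to {ast.unparse(stmt.value)}"
    return "do: " + ast.unparse(stmt)


def describe(source: str) -> list[str]:
    """Return a header line per function, then one line per top-level statement in it."""
    lines = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            args = ", ".join(a.arg for a in node.args.args)
            lines.append(f"define {node.name} taking ({args})")
            lines.extend("  " + describe_statement(s) for s in node.body)
    return lines


code = "def total(xs):\n    s = 0\n    for x in xs:\n        s += x\n    return s\n"
print("\n".join(describe(code)))
```

Even a description this crude can expose a mismatch between what the programmer intended and what the code says; a real tool would need to summarize at a much higher level than statement by statement.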

MISIM is another research project that envisions a collaborative role for AI. It watches the code that a developer is writing, extracting its meaning and comparing it with similar code. It then makes suggestions about rewriting code that looks buggy or inefficient, based on the structure of similar programs. Although its creators suggest that MISIM could eventually lead to machines that program themselves, that’s not what interests me; I’m more interested in the idea that MISIM is helping a human to write better code. AI is still not very good at detecting and fixing bugs, but it is very good at asking a programmer to think carefully when it looks like something is wrong.
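
To give a feel for what "comparing code by structure" could mean, here is a deliberately simple sketch that scores two Python snippets by the sequence of syntax-tree node types they contain. This is not MISIM's method, which is far more sophisticated; the function names `structure` and `similarity` are hypothetical, and the technique swapped in is a plain sequence comparison from the standard library's `difflib`.

```python
import ast
import difflib


def structure(source: str) -> list[str]:
    """Flatten a program into the names of its syntax-tree node types."""
    return [type(node).__name__ for node in ast.walk(ast.parse(source))]


def similarity(a: str, b: str) -> float:
    """Score two snippets from 0.0 to 1.0 by structure, ignoring names and literals."""
    return difflib.SequenceMatcher(None, structure(a), structure(b)).ratio()


first = "def f(a):\n    return a + 1\n"
renamed = "def g(b):\n    return b + 1\n"
different = "for i in range(3):\n    print(i)\n"

print(similarity(first, renamed))    # same shape, different names
print(similarity(first, different))  # different shape
```

A collaborator built on an idea like this would not claim the code is wrong. It would point at structurally similar code that does something slightly different and ask the programmer to look again.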

Is this pair programming with a machine? Maybe. It is definitely enlisting the machine as a collaborator, rather than as a surrogate. The goal isn’t to replace the programmers, but to make them better: to help them work in ways that are faster and more effective.

Will it work? I don’t know; we haven’t built anything like it yet. It’s time to try.